A better way to prompt LLMs in Elixir

In this blog post I explain why I created a new sigil, ~LLM, and introduce you to a new hex package called :ai that contains AI helpers. Want to try it? Check out https://hexdocs.pm/ai.

If you're like me, you're probably using AI in your Elixir apps. In fact, I now install two deps by default in every new Phoenix project:

    defp deps do
      [
        {:replicate, "~> 1.1.1"},
        {:openai, "~> 0.5.2"}
      ]
    end

But now I have a new one to add to this list — https://hexdocs.pm/ai — and this post is about why.

The OpenAI client is great: it's a community-maintained wrapper around the OpenAI API, and a good way to get started with the GPT models. However, the syntax can get tedious. Here's how you can use it to write a conversation between a user and an assistant:

    OpenAI.chat_completion(
      model: "gpt-3.5-turbo",
      messages: [
        %{role: "system", content: "You are a helpful assistant."},
        %{role: "user", content: "Who won the world series in 2020?"},
        %{role: "assistant", content: "The Los Angeles Dodgers won the World Series in 2020."},
        %{role: "user", content: "Where was it played?"}
      ]
    )

This returns an {:ok, map} tuple that looks like:

    {:ok,
     %{
       id: "chatcmpl-7zSQ5oyW0WRLcTuDP3fharswkK0Ml",
       usage: %{
         ...
       },
       created: 1694881609,
       choices: [
         %{
           "finish_reason" => "stop",
           "index" => 0,
           "message" => %{
             "content" => "The 2020 World Series was played at Globe Life Field in Arlington, Texas.",
             "role" => "assistant"
           }
         }
       ],
       model: "gpt-3.5-turbo-0613",
       object: "chat.completion"
     }}

This works well, and lines up perfectly with the OpenAI chat completions API. But it gets tedious (and ugly) writing all those maps and then parsing the results. (I don't care about all that metadata! Just give me the results!)

Luckily, in Elixir we have a powerful tool in sigils. Even more luckily, José Valim recently added multi-character sigils, and I recently discovered that you can write your own.
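
If you haven't written a sigil before, here is a minimal sketch of a custom multi-letter one. The module name `WordSigil` and the sigil `~WORDS` are made up for illustration and are not part of the :ai package. Multi-letter sigil names must be all uppercase and need Elixir 1.15 or later.

```elixir
defmodule WordSigil do
  # Hypothetical example sigil. A sigil ~NAME is just a function named
  # sigil_NAME/2: it receives the text between the delimiters and a
  # charlist of any trailing modifiers.
  def sigil_WORDS(string, _modifiers) do
    String.split(string)
  end
end

defmodule WordSigilDemo do
  # Importing the module brings the sigil into scope.
  import WordSigil

  def run do
    ~WORDS"stack snow blocks"
  end
end
```

Calling `WordSigilDemo.run()` returns the list of words. Uppercase sigils skip interpolation and escape processing, so the function receives the raw text.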

So, I've created a new ~LLM sigil. ~LLM takes a string and converts it into the map format that OpenAI expects. I've been using this in all my projects now, and it really cleans up the code. It's more readable and editable, takes up fewer lines, and most importantly, it looks cleaner 😉

With it, we can write the same conversation as:

    ~LLM"""
    model: gpt-3.5-turbo
    system: You are a helpful assistant.
    user: Who won the world series in 2020?
    assistant: The Los Angeles Dodgers won the World Series in 2020.
    user: Where was it played?
    """
    |> OpenAI.chat_completion()

That's much better! It works by looking for the model, system, user and assistant keywords and parsing the string into the format required by OpenAI. The only requirement for the sigil is that the first line must be the model. After that, you can add as many lines as you want, and they will be parsed into the correct format. It also works as a single-line string, with no need for line breaks:

    iex> ~LLM"model:gpt-3.5-turbo user: how do I build an igloo in 10 words?"
    [
      model: "gpt-3.5-turbo",
      messages: [%{role: "user", content: "how do I build an igloo in 10 words?"}]
    ]

We can go a step further though. When I run a chat completion, I'm not interested in the metadata, or parsing out the choices list. Look at what OpenAI returns by default:

    iex> ~LLM"model:gpt-3.5-turbo user: how do I build an igloo in 10 words?" |> OpenAI.chat_completion()
    {:ok,
     %{
       id: "chatcmpl-7zSc1rsCXpyALMjM9MkaF077xYRot",
       usage: %{
         "completion_tokens" => 10,
         "prompt_tokens" => 19,
         "total_tokens" => 29
       },
       created: 1694882349,
       choices: [
         %{
           "finish_reason" => "stop",
           "index" => 0,
           "message" => %{
             "content" => "Compact and stack snow blocks in a dome shape.",
             "role" => "assistant"
           }
         }
       ],
       model: "gpt-3.5-turbo-0613",
       object: "chat.completion"
     }}

Yuck! I just want the response! So I have a helper function that parses the response for me:

    iex> ~LLM"model:gpt-3.5-turbo user: how do I build an igloo in 10 words?" |> chat()
    {:ok, "Stack blocks of compacted snow in a dome shape for igloo."}

    iex> ~LLM"""
    model:gpt-4 system: you hate the movie the babadook. It's subtle though.
    user: what do you love about the movie the Babadook?
    """
    |> chat()
    {:ok, "While I personally didn't enjoy The Babadook, I can recognize that it is a well-crafted film. The acting performances, particularly from Essie Davis, are strong and convincing. The movie is also praised for its nuanced portrayal of grief and depression. Additionally, it employs creative and minimalistic special effects to maintain an eerie, suspenseful atmosphere. But overall, it wasn't my favorite."}

This is much cleaner. It also handles error states:

    iex> ~LLM"model:gpt-3.5-turbi user: how do I build an igloo in 10 words?" |> chat()
    {:error, "The model `gpt-3.5-turbi` does not exist"}
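
The post doesn't show chat/1 itself, so what follows is only a sketch of how such a helper might unwrap the result, not the package's actual code. The names `ChatSketch` and `unwrap/1` are hypothetical. The success shape is taken from the response printed earlier; the error shape (a string-keyed map with a nested "message") is an assumption about what the OpenAI client hands back. To stay free of network calls, the function takes the result of OpenAI.chat_completion/1 as its argument.

```elixir
defmodule ChatSketch do
  # Hypothetical helper, not the :ai package's implementation.

  # Success: keep only the text of the first choice.
  def unwrap({:ok, %{choices: [%{"message" => %{"content" => content}} | _]}})
      when is_binary(content) do
    {:ok, content}
  end

  # Assumed API error shape: %{"error" => %{"message" => "..."}}.
  def unwrap({:error, %{"error" => %{"message" => message}}}) do
    {:error, message}
  end

  # Any other error (timeouts, transport failures) is passed through.
  def unwrap({:error, reason}), do: {:error, reason}

  # Anything else is reported rather than silently dropped.
  def unwrap(other), do: {:error, {:unexpected_response, other}}
end
```

With a wrapper like this, a pipeline would end in `|> OpenAI.chat_completion() |> ChatSketch.unwrap()`.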

Installation

Want to use the ~LLM sigil in your own apps? I made a package to make this easy — just add ai to your list of deps.

    def deps do
      [
        {:ai, "~> 0.3.3"}
      ]
    end

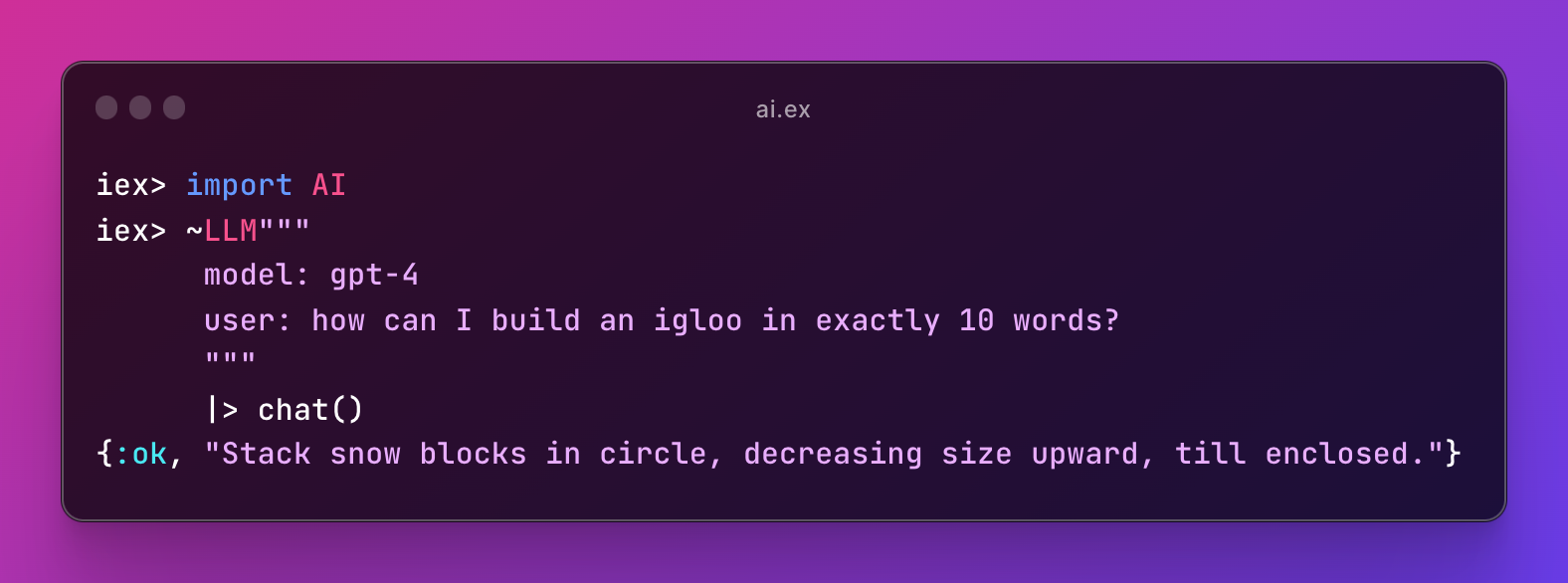

Then import AI, and the ~LLM sigil and the chat/1 function will be available to you. Many more features incoming.

    iex> import AI
    iex> ~LLM"model: gpt-4 user: hello world" |> chat()
    {:ok, "Hello! How can I assist you today?"}

How does it work?

The implementation for ~LLM is quite simple. It was, of course, mostly written by GPT-4. Here's the code:

    def sigil_LLM(lines, _opts) do
      lines |> text_to_prompts()
    end

    defp text_to_prompts(text) when is_binary(text) do
      model = extract_model(text) |> String.trim()
      messages = extract_messages(text)
      [model: model, messages: messages]
    end

    defp extract_model(text) do
      extract_value_after_keyword(text, "model:")
    end

    defp extract_messages(text) do
      keywords = ["system:", "user:", "assistant:"]

      Enum.reduce_while(keywords, [], fn keyword, acc ->
        case extract_value_after_keyword(text, keyword) do
          nil ->
            {:cont, acc}

          value ->
            role = String.trim(keyword, ":")
            acc = acc ++ [%{role: role, content: String.trim(value)}]
            {:cont, acc}
        end
      end)
    end

    defp extract_value_after_keyword(text, keyword) do
      pattern = ~r/#{keyword}\s*(.*?)(?=model:|system:|user:|assistant:|$)/s

      case Regex.run(pattern, text) do
        [_, value] -> value
        _ -> nil
      end
    end
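
One thing to note about the listing above: Regex.run/2 returns only the first match, so as written each role is picked up at most once, and the messages come out in system, user, assistant order. A conversation with a second user turn, like the World Series example, needs a little more. Here is one possible variation, a sketch rather than the package's code, that uses Regex.scan/3 to collect every keyword and value pair in the order it appears. The module name `PromptSketch` is hypothetical.

```elixir
defmodule PromptSketch do
  # Hypothetical variation on the parser above, not the :ai package's code.
  def parse(text) when is_binary(text) do
    # Capture a keyword, then lazily capture its value up to the next
    # keyword or the end of the string.
    pattern = ~r/(model|system|user|assistant):\s*(.*?)(?=(?:model|system|user|assistant):|\z)/s

    pairs =
      pattern
      |> Regex.scan(text, capture: :all_but_first)
      |> Enum.map(fn [key, value] -> {key, String.trim(value)} end)

    # The first "model:" entry names the model (nil if there is none).
    model = Enum.find_value(pairs, fn {key, value} -> if key == "model", do: value end)

    # Every other entry becomes a message, in document order.
    messages =
      for {role, content} <- pairs, role != "model" do
        %{role: role, content: content}
      end

    [model: model, messages: messages]
  end
end
```

Like the original, this splits on the bare keywords, so a message whose text itself contains something like "user:" would be cut at that point.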

Thanks for reading! I'll have more to say on using AI in Elixir apps soon. For more updates, check out my X.